Manual testing of your mobile products is important and useful. However, it does not scale well across the myriad devices, operating systems, screen sizes and other combinations that your users rely on. Automated testing helps here, because the same tests can be executed repeatedly and quickly on a multitude of devices.

What is Mobile Test Automation?

Mobile test automation means writing code and/or using tools to automate the testing of mobile software: either mobile versions/renderings of websites, or native mobile apps, for example on iOS or Android phones and tablets.

Why do Mobile Test Automation?

Mobile use and traffic are only increasing. In the first quarter of 2021, mobile devices (excluding tablets) generated 54.8 percent of global website traffic. In addition, with over 218 billion app downloads in 2020, having a quality mobile app is more important than ever.

Testing of mobile apps or websites, however, is complex and challenging. There are now thousands of different phones, tablets and other devices, with different operating systems, screen resolutions, performance characteristics and memory limitations. Browser versions also differ across devices. It’s impossible to test every combination, and harder still to attempt it manually. To get the best level of quality, you want to test as many combinations as possible that cover a wide cross-section of your users. Since that could take quite some time, automation helps greatly by repeating the same tests on multiple devices.

Key Concepts

Mobile automation is in many respects not dissimilar to automation on other platforms. Your application will have components requiring testing, and these will likely include a front-end user interface, whether an app or a website. There’s also a strong likelihood that this will connect to a back-end, for example a set of microservices or cloud-based applications/databases.

1. Scope

For the testing, your scope will need to be identified. Will it be functional only, or will other aspects like performance need to be tested as well? Is the automation focus to be on regression testing, or will it increase with each new release/user story that goes into the system under test?

2. What to Automate?

One of the key aspects of automation is deciding how much to automate. Ideally, you’d want to target the areas of most use, most change and highest risk first, and expand as you see fit. Once the coverage is identified, decide which kinds of testing need to be automated for it: for example, just functional UI testing, or also API testing and other performance indicators, such as automated tests that evaluate network traffic utilisation, memory management and data storage.
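One way to make "most use, most change and highest risk" concrete is a simple scoring sketch like the one below. The 1 to 5 scales, the multiplication, and the feature names are all assumptions chosen for illustration, not a standard formula; any weighting your team agrees on would do.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    usage: int        # 1 (rarely used) to 5 (used by almost everyone)
    change_rate: int  # 1 (stable) to 5 (changes every release)
    risk: int         # 1 (cosmetic) to 5 (payments, data loss, security)


def priority(feature: Feature) -> int:
    # Hypothetical score: a feature that is heavily used, frequently
    # changed and high risk floats to the top of the backlog.
    return feature.usage * feature.change_rate * feature.risk


def automation_backlog(features):
    """Return features ordered from highest to lowest automation priority."""
    return sorted(features, key=priority, reverse=True)


features = [
    Feature("settings", usage=2, change_rate=1, risk=2),
    Feature("checkout", usage=5, change_rate=3, risk=5),
    Feature("login", usage=5, change_rate=2, risk=4),
]
backlog = [f.name for f in automation_backlog(features)]
print(backlog)
```

The ordering is only as good as the scores fed into it, so it is best treated as a conversation starter for planning rather than a decision.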

3. Device Coverage

An important decision for mobile automation is which devices the automation will run against: the devices under test. You may find it useful to gather a cross-section of Android and iOS devices, different mobile browsers (Firefox, Brave and Safari, for example) and different screen sizes. Naturally, if you’re only targeting an iOS app, or phones and not tablets, you can reduce the set of devices substantially.

Use your product analytics or user research to identify the devices and mobile browsers that you need to support. Be aware that each different device can introduce bugs even with similar operating systems or browsers. Similarly, different operating system versions on the same device can behave wildly differently.
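As a rough illustration of how quickly combinations multiply, and how much a narrower target shrinks them, the sketch below enumerates a small device matrix. The OS versions, browsers and form factors listed are placeholder assumptions; in practice they would come from your own analytics.

```python
from itertools import product

# Hypothetical support targets; replace with values from your analytics.
platforms = {
    "Android": {"os_versions": ["13", "14"], "browsers": ["Chrome", "Firefox"]},
    "iOS": {"os_versions": ["16", "17"], "browsers": ["Safari"]},
}
form_factors = ["phone", "tablet"]


def build_matrix(platforms, form_factors, include_tablets=True):
    """List every (platform, OS version, browser, form factor) combination."""
    rows = []
    for platform, spec in platforms.items():
        combos = product(spec["os_versions"], spec["browsers"], form_factors)
        for os_version, browser, form in combos:
            if form == "tablet" and not include_tablets:
                continue
            rows.append((platform, os_version, browser, form))
    return rows


full_matrix = build_matrix(platforms, form_factors)
phones_only = build_matrix(platforms, form_factors, include_tablets=False)
print(len(full_matrix), len(phones_only))
```

Even this tiny example produces a dozen combinations, which is why pruning the matrix with real usage data matters.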

Key Inputs

First up is establishing what the automation will be running against in terms of a technology stack. Is it a simple app running solely on a device? Or a responsive website? Does it connect to backend APIs and databases? What security exists for this?

It’s important to note that some of this stack (e.g. the APIs and database) can be tested separately from the mobile automation, depending on how you build your testing framework. For example, you may have a separate API test suite using a tool like Jetpack/Postman.

A useful point of input into mobile automation is in the planning and kick-off ceremonies for stories. During planning and estimation, the team can decide if the story could/should be automated, and depending on the estimation policy of the team, include this estimate in their story sizing.

At kick-off, when a developer picks up a story, the ‘three amigos’ (business/product person, developer and tester) can again discuss the story, their understanding of it, and what can or should be done regarding automation. For example: will the developer’s unit tests be enough, should integration tests be written, or should fully automated end-to-end tests be built to run on mobile devices?

Another key input when evaluating potential stories is the amount of time and effort it will take to automate a test, weighed against the perceived benefit of leaving the current test purely manual. The desire to ‘just automate everything’ is often strong, but the usefulness of the specific test, how often it will be reused, the stability of the code under test and the complexity of automating it should all be taken into account.
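A back-of-the-envelope way to weigh that effort is a break-even calculation: how many runs before the automated test has paid for itself? The sketch below assumes you can estimate three numbers in minutes (build cost, manual cost per run, and upkeep per automated run); the figures used are invented for illustration.

```python
import math


def break_even_runs(build_minutes, manual_minutes_per_run, upkeep_minutes_per_run):
    """Number of runs after which automating is cheaper than staying manual.

    Returns None when automation never pays off, i.e. when the upkeep per
    run is at least as expensive as running the test by hand.
    """
    saving_per_run = manual_minutes_per_run - upkeep_minutes_per_run
    if saving_per_run <= 0:
        return None
    return math.ceil(build_minutes / saving_per_run)


# Two days to build, 30 minutes by hand, 6 minutes of upkeep per run.
runs_needed = break_even_runs(960, 30, 6)
# A flaky test whose upkeep costs as much as running it manually.
never_pays_off = break_even_runs(600, 5, 5)
print(runs_needed, never_pays_off)
```

A test that runs on every commit reaches its break-even point in days, while one that runs once per quarterly release may never get there.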

A general approach is to inspect your analytics and determine what percentage of users could be covered with a reasonable amount of effort, perhaps following the 80/20 rule (the Pareto principle).
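Applied to device selection, that idea can be sketched as picking the most-used devices until a target share of users is reached. The device names and percentages below are made up; the 80 percent target is the assumption borrowed from the 80/20 rule.

```python
def devices_for_coverage(usage_pct, target_pct=80):
    """Pick the most-used devices until at least target_pct of users is covered.

    usage_pct maps a device name to its share of users, in whole percent.
    """
    selected, covered = [], 0
    ranked = sorted(usage_pct.items(), key=lambda item: item[1], reverse=True)
    for device, share in ranked:
        if covered >= target_pct:
            break
        selected.append(device)
        covered += share
    return selected, covered


# Hypothetical analytics export.
usage_pct = {
    "Phone A": 35,
    "Phone B": 25,
    "Tablet C": 15,
    "Phone D": 10,
    "Phone E": 8,
    "Other": 7,
}
selected, covered = devices_for_coverage(usage_pct)
print(selected, covered)
```

Here four devices cover 85 percent of users, and the remaining long tail can be handled with occasional manual or cloud-based checks.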

Preparation

As discussed in the inputs, each story can be looked at during a sprint and assigned a status of needing automation or not. That helps address each set of tests.

However, in preparing to start automation at all, we need to look at a framework. If we’re testing a native app, Appium is a great tool that allows you to write tests that can be executed on both iOS and Android devices. A framework can be built around this using the WebDriver protocol to send commands to each of a set of devices and report the results.
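When a framework drives several devices through Appium, each session is typically described by a set of capabilities. The sketch below only builds those capability dictionaries, so it runs without Appium installed. The capability keys shown (`platformName`, `appium:automationName`, `appium:deviceName`, `appium:app`) are standard Appium ones, while the device names and app paths are hypothetical.

```python
# Automation backends commonly used by Appium for each platform.
AUTOMATION_NAMES = {"Android": "UiAutomator2", "iOS": "XCUITest"}


def appium_capabilities(platform, device_name, app_path):
    """Build a capabilities dict describing one device session."""
    if platform not in AUTOMATION_NAMES:
        raise ValueError(f"unsupported platform: {platform}")
    return {
        "platformName": platform,
        "appium:automationName": AUTOMATION_NAMES[platform],
        "appium:deviceName": device_name,
        "appium:app": app_path,
    }


# Hypothetical devices and build artefacts.
sessions = [
    appium_capabilities("Android", "Pixel 7", "/builds/app-debug.apk"),
    appium_capabilities("iOS", "iPhone 15", "/builds/MyApp.app"),
]
for caps in sessions:
    print(caps["platformName"], caps["appium:automationName"])
```

Keeping capabilities in data like this means the same test code can be pointed at a new device by adding one entry rather than writing a new test.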

Similarly, WebDriver can be used with Selenium to run tests against a mobile browser emulated on a desktop (for example, Chrome’s mobile emulation mode), or you can use Appium to launch Safari/Chrome on the mobile device(s) themselves.
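The two options differ mainly in the capabilities sent to WebDriver. As a sketch, the first function below builds the `goog:chromeOptions` / `mobileEmulation` structure that ChromeDriver accepts for desktop emulation, and the second builds the capabilities for asking Appium to open a real mobile browser. The screen metrics and user agent string are placeholder assumptions.

```python
def chrome_mobile_emulation(width, height, pixel_ratio, user_agent):
    """Capabilities for desktop Chrome pretending to be a mobile device."""
    return {
        "browserName": "chrome",
        "goog:chromeOptions": {
            "mobileEmulation": {
                "deviceMetrics": {
                    "width": width,
                    "height": height,
                    "pixelRatio": pixel_ratio,
                },
                "userAgent": user_agent,
            }
        },
    }


def appium_mobile_browser(platform):
    """Capabilities for launching the platform's browser on a real device."""
    browsers = {"Android": ("Chrome", "UiAutomator2"), "iOS": ("Safari", "XCUITest")}
    browser, automation = browsers[platform]
    return {
        "platformName": platform,
        "browserName": browser,
        "appium:automationName": automation,
    }


# Placeholder metrics and user agent for illustration only.
emulated = chrome_mobile_emulation(360, 640, 3.0, "ExampleMobileUA/1.0")
on_device = appium_mobile_browser("iOS")
print(emulated["goog:chromeOptions"]["mobileEmulation"]["deviceMetrics"])
print(on_device)
```

Desktop emulation is quick and cheap but only approximates screen size and user agent, so it complements rather than replaces runs on real devices.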

You also need to decide where these are going to be run. It’s common at the start to begin developing locally, running tests on a physical device to hand. You may have a set of devices hooked up to a local machine which then runs the tests against each handset/tablet.

You could also run tests on an emulator or simulator, for example an Android Virtual Device created through Android Studio.

As your set of devices expands, the combinations increase, or you need to test against devices/combinations that you don’t have to hand, a cloud-based system may be more appropriate. This is where a tool like BrowserStack can be used: it offers more than 3,000 real devices and browsers to test against and report on, avoiding the use of emulators.

Key Activities

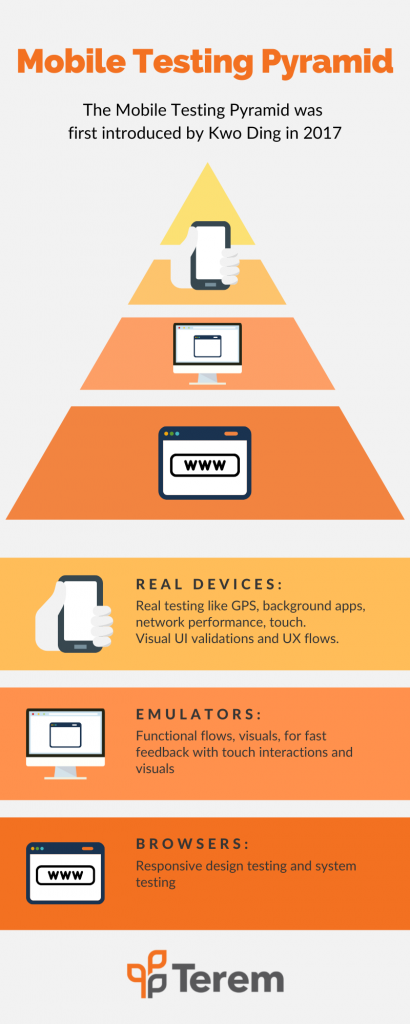

There are a lot of entry points into mobile automation. Some are easier than others, and some are more effective than others. One common guideline is the mobile test pyramid, which differs notably from the standard testing pyramid: it notes that it’s harder to write as many low-level unit or API tests on mobile, and while doable, the economic return isn’t always there. Others may find that philosophies like the testing trophy, which also incorporates static tests, fit their culture/company goals more closely. Keep this in mind when choosing what and where to automate/test.

1. Unit Tests

From the developers’ point of view, some of the most useful feedback comes from unit and integration tests. As mentioned, these are sometimes more difficult to write when various layers or systems need to be faked or mocked just to get a unit test to function. The same is true on other platforms, but it is often harder in the less mature mobile testing world. Building these tests where economical and useful is still critical, and they should be integrated with a CI/CD pipeline early on for fast feedback.
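To show what faking a layer can look like, here is a minimal sketch in which a backend client is replaced with a mock so that a piece of presentation logic can be tested on its own. The `cart_summary` function and the `get_cart` method are hypothetical names invented for this example.

```python
from unittest.mock import Mock


def cart_summary(api_client, user_id):
    """Code under test: formats the cart returned by a backend client."""
    cart = api_client.get_cart(user_id)
    total_cents = sum(item["price_cents"] * item["qty"] for item in cart["items"])
    return f"{len(cart['items'])} items, total ${total_cents / 100:.2f}"


# The real client would call the network; the mock returns canned data.
fake_client = Mock()
fake_client.get_cart.return_value = {
    "items": [
        {"price_cents": 250, "qty": 2},
        {"price_cents": 999, "qty": 1},
    ]
}

summary = cart_summary(fake_client, user_id=42)
print(summary)

# The mock also records how it was used, which can itself be asserted.
fake_client.get_cart.assert_called_once_with(42)
```

Because nothing here touches a device or a network, a test like this runs in milliseconds and suits the fast-feedback stage of a pipeline.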

2. Front-End Testing

A lot of mobile testing focuses on what the user sees, as it’s immediately obvious and clear that it ‘needs testing’. This is also an area that can be automated relatively easily using tools like Appium, and it can be used to complete E2E tests, as in the Flipped Mobile Testing Pyramid. By automating and emulating how a user interacts with the app/website (clicks, drags, text entry etc.), you get a good indication of whether common tasks can be completed, and this can be checked across many devices quickly.
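A common way to structure such UI tests is a screen (or page) object that hides element lookups behind user-level actions. The sketch below assumes a driver exposing `find_element(by, value)` and elements exposing `send_keys` and `click`, as WebDriver-style clients do. The `FakeDriver` stands in for a real session so the example runs anywhere, and the accessibility ids are hypothetical.

```python
class LoginScreen:
    """Screen object: one place that knows how to find the login widgets."""

    # Locator strategy plus hypothetical accessibility ids.
    USERNAME = ("accessibility id", "username")
    PASSWORD = ("accessibility id", "password")
    SUBMIT = ("accessibility id", "login-button")

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()


class FakeElement:
    """Records interactions instead of touching a real device."""

    def __init__(self, log, name):
        self.log, self.name = log, name

    def send_keys(self, text):
        self.log.append(("type", self.name, text))

    def click(self):
        self.log.append(("tap", self.name))


class FakeDriver:
    def __init__(self):
        self.log = []

    def find_element(self, by, value):
        return FakeElement(self.log, value)


driver = FakeDriver()
LoginScreen(driver).log_in("sam", "s3cret")
print(driver.log)
```

If a locator changes, only the screen object needs updating, which keeps maintenance manageable as the suite runs across more devices.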

3. API Testing

Since many of the systems are often mocked for front-end and unit testing, it can be more useful to write and automate separate test suites for those other systems, such as APIs. A tool like Postman with Jetpack can be used to target and test the various endpoints created for these systems, automatically confirming data, access rights, endpoint functionality and more.
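Whatever tool sends the requests, the checks themselves tend to look alike: expected status, well-formed body, required fields of the right type. The sketch below expresses those checks as a plain function; the endpoint fields and sample responses are invented for illustration.

```python
import json


def check_response(status, body, expected_status, required_fields):
    """Return a list of problems found in an API response (empty means OK)."""
    problems = []
    if status != expected_status:
        problems.append(f"expected status {expected_status}, got {status}")
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return problems + ["body is not valid JSON"]
    if not isinstance(payload, dict):
        return problems + ["body is not a JSON object"]
    for field, field_type in required_fields.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], field_type):
            problems.append(f"wrong type for field: {field}")
    return problems


# Hypothetical contract for a user endpoint.
user_fields = {"id": int, "email": str}

good = check_response(200, '{"id": 7, "email": "a@example.com"}', 200, user_fields)
bad = check_response(403, '{"id": "7"}', 200, user_fields)
print(good)
print(bad)
```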

4. Storage

What you test here will depend on whether you have local (on-device) or remote (cloud/network) storage, and on what is stored where: credentials, PII, purchase/sale data. You’ll want to test that the correct data is being stored and retrieved. Sometimes this is more easily done as part of an end-to-end test (to confirm, say, that signing up actually stored the new user), and sometimes as separate data integrity tests. For the former, a Selenium/Appium test can query the database after performing the user actions.
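The "act, then check the database" pattern can be sketched with an in-memory SQLite database. The `sign_up` function below stands in for the user action that a UI test would perform, and the table layout is an assumption made for the example.

```python
import sqlite3


def sign_up(conn, email):
    """Stand-in for the app's sign-up flow: normalises and stores the email."""
    conn.execute("INSERT INTO users (email) VALUES (?)", (email.strip().lower(),))
    conn.commit()


# In a real suite this would be a connection to the test environment's database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)")

# Step 1: perform the user action (via Appium/Selenium in a real test).
sign_up(conn, "  New.User@Example.com ")

# Step 2: verify what was actually persisted.
rows = conn.execute("SELECT email FROM users").fetchall()
print(rows)
```

Checking the stored value directly catches problems the UI would hide, such as data saved in the wrong format or not saved at all.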

5. Network Testing

This is more important if your app performs network operations, sending data back and forth to a server or cloud service. Mobile users are often constrained by telco data plan limits, as well as by the bandwidth of the 3G/4G/5G services available to them. Developing automation to measure and track network usage over time for various test cases may help identify ‘leaks’ of extraneous network usage, or degradations introduced by new stories or bug fixes.
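Once per-test network usage is being recorded, spotting a regression can be as simple as comparing the latest run with a stored baseline. The sketch below assumes usage is captured in bytes per test case and that a 10 percent increase is the alert threshold; both the figures and the threshold are illustrative.

```python
def network_regressions(baseline_bytes, current_bytes, tolerance_pct=10):
    """Return tests whose network usage grew by more than tolerance_pct."""
    flagged = {}
    for test_name, baseline in baseline_bytes.items():
        current = current_bytes.get(test_name)
        if current is None:
            continue  # test not run this time; nothing to compare
        # Integer comparison avoids floating-point rounding surprises.
        if current * 100 > baseline * (100 + tolerance_pct):
            flagged[test_name] = (baseline, current)
    return flagged


# Hypothetical measurements from two pipeline runs.
baseline = {"login": 120_000, "feed_scroll": 900_000, "checkout": 300_000}
current = {"login": 125_000, "feed_scroll": 1_200_000, "checkout": 329_000}

flagged = network_regressions(baseline, current)
print(flagged)
```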

6. Localisation

Localisation testing, especially of translated text throughout an application or website, lends itself to automation very well. Tests can automatically confirm that words for the language under test match a dictionary, or that only expected phrases from a list appear. This form of text matching can be performed accurately and efficiently by tests that are relatively inexpensive to write and maintain.
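As a small example of this kind of text matching, the sketch below compares a translated string table against the source language and reports missing entries, strings left untranslated, and placeholders that do not match. The `{name}`-style placeholder syntax and the sample strings are assumptions.

```python
import re


def placeholders(text):
    """Return the sorted placeholder names, e.g. ['count'] for '{count} items'."""
    return sorted(re.findall(r"\{(\w+)\}", text))


def localisation_problems(source, translated):
    """Compare a translated string table with the source-language table."""
    problems = []
    for key, source_text in source.items():
        text = translated.get(key, "")
        if not text.strip():
            problems.append((key, "missing"))
        elif text == source_text:
            # Heuristic only: some strings are legitimately identical.
            problems.append((key, "untranslated"))
        elif placeholders(text) != placeholders(source_text):
            problems.append((key, "placeholder mismatch"))
    return problems


english = {
    "greeting": "Hello, {name}!",
    "cart.empty": "Your cart is empty",
    "logout": "Log out",
    "items": "{count} items",
}
french = {
    "greeting": "Bonjour, {nom} !",
    "cart.empty": "Your cart is empty",
    "items": "{count} articles",
}

problems = localisation_problems(english, french)
print(problems)
```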

7. Usability/Accessibility

An oft-forgotten aspect of testing is usability testing, both of the UX in general and for differently-abled users, for example vision-impaired users who may be using assistive software, like a screen reader, to access your product. This may sound like a complicated extra step, but some of it can actually be automated, including checking aspects of WCAG 2.0 (Web Content Accessibility Guidelines). Tools like WAVE can perform this automatically on websites and some apps, and can help you identify small and often easy fixes that really help users who are sometimes underserved.
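One WCAG 2.0 check that is easy to automate is colour contrast. The sketch below implements the relative luminance and contrast ratio formulas from WCAG 2.0 and compares the result with the 4.5:1 minimum that level AA sets for normal-size text. The colour values are arbitrary examples.

```python
def _linear(channel):
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.0 definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    r, g, b = (_linear(value) for value in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """Contrast ratio between two colours, from 1 (identical) to 21."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground, background):
    return contrast_ratio(foreground, background) >= 4.5


white = (255, 255, 255)
black = (0, 0, 0)
light_grey = (153, 153, 153)

print(round(contrast_ratio(black, white), 2))
print(passes_aa_normal_text(light_grey, white))
```

A check like this could be run over the colour pairs in a design system or theme file, flagging combinations that need attention before they reach users.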

8. Execution of Testing

Ideally, automation runs regularly and automatically. A good way to achieve this is to integrate it with CI/CD pipelines and have it executed after each commit to chosen branches, as well as after, say, a release label is created, as part of regression testing. DevOps/QA best practice is to run early and run often.

Technical Challenges Specific to Mobile Automation

Web browsers were in many ways designed for stationary devices with large memory spaces and plenty of power. Mobile devices, on the other hand, are on the move. The apps on them face more stringent constraints on memory, CPU and network, and must cope with many different operating systems, screen sizes and interactions.

- Memory Limitations: Many mobile devices are limited by their memory and storage space. The size and demands of web browsers, even on a mobile device, can slow performance, and apps need to be tested to see whether they’ll push against the limits of the variety of devices.

- Application Types: Web applications could be using a variety of versions of HTML, CSS and JS, while native apps could be written in Java, Objective-C or Swift, or some mixture of these. A plan is required to cover a range of devices with combinations of browsers, OSs and screen sizes.

- Network: On a desktop, or on a mobile device in your office, the network may be very performant, with low latency and next to no packet loss. Out on the street, however, the users may have no wifi, spotty internet, could be on a plane with zero internet access, or on a network with no outside access. These combinations need to be checked to see how the application performs in these different environments.
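The network scenarios in the last point can be turned into a repeatable check by running the same behaviour under several simulated conditions. In the sketch below, the profiles, the 2-second timeout and the `load_feed` fallback logic are all hypothetical; a real suite would apply the conditions through device settings or a network-shaping tool instead.

```python
# Hypothetical network conditions a user might experience.
PROFILES = {
    "office_wifi": {"online": True, "latency_ms": 20},
    "spotty_3g": {"online": True, "latency_ms": 4000},
    "airplane_mode": {"online": False, "latency_ms": 0},
}


def simulated_fetch(profile, timeout_ms=2000):
    """Pretend to call the server under the given network conditions."""
    if not profile["online"]:
        raise ConnectionError("no network")
    if profile["latency_ms"] > timeout_ms:
        raise TimeoutError("request timed out")
    return ["fresh item"]


def load_feed(profile, cache):
    """Behaviour under test: fall back to cached data when the network fails."""
    try:
        return simulated_fetch(profile), "live"
    except (ConnectionError, TimeoutError):
        return cache, "cached"


results = {
    name: load_feed(profile, cache=["cached item"])[1]
    for name, profile in PROFILES.items()
}
print(results)
```

The point of the exercise is that the app degrades gracefully in every profile rather than crashing or hanging in the ones developers rarely experience at their desks.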

Outputs

Generally, post execution, you’ll produce (ideally automatically):

- A set of test results, including pass / fails, tests run, which ones had issues etc

- Metrics like coverage and network usage (the latter can be especially important on mobile)

- Trend statistics – eg pass/fail over time

- Screenshots of errors, failures

- Logs of test runs, which will help for diagnosing issues

And as a result of this, defect reports can be raised by anyone investigating or analysing the above outputs.
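Producing these outputs automatically usually starts with a small summarising step over the raw results. The sketch below assumes each result is a record with a test name, device and status; the record layout and sample data are invented for the example.

```python
from collections import Counter


def summarise(results):
    """Condense raw test results into headline numbers for a report."""
    statuses = Counter(result["status"] for result in results)
    total = len(results)
    failures = [r for r in results if r["status"] == "fail"]
    return {
        "total": total,
        "passed": statuses["pass"],
        "failed": statuses["fail"],
        "pass_rate_pct": round(100 * statuses["pass"] / total, 1) if total else 0.0,
        "failed_tests": [(r["name"], r["device"]) for r in failures],
        "failures_by_device": dict(Counter(r["device"] for r in failures)),
    }


# Hypothetical results from one pipeline run.
results = [
    {"name": "login", "device": "Phone A", "status": "pass"},
    {"name": "login", "device": "Tablet C", "status": "pass"},
    {"name": "checkout", "device": "Phone A", "status": "pass"},
    {"name": "checkout", "device": "Tablet C", "status": "fail"},
]

report = summarise(results)
print(report)
```

Storing one such summary per run is also enough to build the pass/fail trend over time, and grouping failures by device quickly shows whether a problem is device-specific.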

Further Reading

Articles

- Mobile Automation Testing Steps and Process

- Top 10 Automation Testing Tools for Mobile Applications

- Setting up Appium on Windows and Ubuntu

Mark Mayo

Senior Quality Engineer

Mark Mayo is a Senior Quality Engineer at Terem. He has nearly two decades of software development and quality assurance experience across a variety of industries over four countries, including aviation, networking, real estate, travel, video games and banking.

LinkedIn: linkedin.com/in/mark-mayo